Introduction

As robots tackle increasingly complex tasks, it becomes impractical, dangerous, or impossible to rely on hardware prototypes to develop them. Roboticists are therefore turning to simulation to get valuable data for designing the hardware and software components of their robots, as well as for testing and validating their control algorithms. Simulation is cheap to run and scales to many parallel processes, but it comes with a steep up-front development cost. A valid simulation requires many fundamental components, such as a physics engine, a rendering engine, models for all of the sensors included in the robot, and all of the technical art and assets needed to simulate the scenarios in which the robot will operate. One way some roboticists are attempting to decrease the up-front development costs is by re-using popular video game engines such as Unity or Unreal to run the simulations. At first glance, this appears to be an attractive option for a variety of reasons:

- Video game engines have a lot of the same fundamentals needed for robotic simulation, such as graphics rendering pipelines and physics engines. In addition, they have generally been fine-tuned for performance and realism, as video game consumers have demanded more and more realistic behavior from their games.

- Video game engines are extremely customizable, making it easy to extend the engine with custom functionality.

- Many large video game engines, such as Unity and Unreal, offer an “Asset Store,” in which, for a nominal fee, users can download technical assets such as maps, art, or code plugins to add to their custom designs. For example, rather than taking the development time to create virtual flowers to place into the simulation, users can search “flowers” in the Unreal Store, and download a package that contains hundreds of different flowers that can simply be dragged and dropped into the scene. This saves vast amounts of development time, as months of work can be simply bought and downloaded. In fact, many of these packages contain realistic maps that (in theory) should be ready to go out-of-the-box, requiring no development effort at all!

One of the tasks that I am currently developing a robot for is the Seattle Robotics Society’s RoboMagellan competition. In this competition, robots need to autonomously navigate between different waypoints in an unknown outdoor environment, while negotiating some light obstacles. In previous exercises, I developed UrdfSim, a simulation framework built on the Unreal Engine. In this post I’ll take a look at how difficult it is to use this framework to simulate a real task, as well as some of the problems and caveats of using a video game engine that were discovered during the development process. By the end of the post, we’ll have a fully functional simulation environment for the RoboMagellan competition that can be used to validate control algorithms or collect training data for machine learning algorithms.

(n.b. if you’re just looking for the download links, scroll to the bottom of this page.)

Premade Maps

At first, the design of the simulation appeared straightforward: go to the Unreal store, find a few suitable maps, compile them with UrdfSim, and write the Python scaffolding code to run the simulation. This approach quickly ran into a few problems. First, most of the packages available on the Unreal store were not full-blown maps, but smaller collections of assets. For example, there might be a pack of textures, or a pack of meshes, or a pack of code plugins that could be downloaded. These assets are important, but they aren’t an entire level. Once the available packages had been filtered down to just the maps, additional problems were discovered:

- Too small. Many of the available maps were extremely small, and mainly served to show what assets were included in the pack. In addition, many of them had the edges of the map showing, which would cause simulation artifacts. For example, in the map below, the edge is clearly visible.

-

Too large. Of the remaining maps, some of them were too large. For example, some of the maps contained vast landscapes that spanned miles and miles of terrain. While nice, running these maps would be extremely and unnecessarily resource intensive, limiting the usefulness of the simulation.

-

Too uniform. One of the main value propositions of using simulation in the robotic design process is the ability to catch edge cases. In order to do this, the terrain needs to have enough variety to exercise all of the scenarios in which the robot may be found. Many maps were very uniform, e.g. a giant meadow. Although the map would be large, the extra space does not lead to new, interesting scenarios for the robot. For example, the below map is enormous (hundreds of square kilometers), but the texturing is very uniform, making most of that space redundant.

-

Unrealstic styling. Some of the maps had texturing and design that did not resemble the conditions that the robot would find itself in. For example, the textures may be cartoonish or futuristic, rendering the maps useless.

-

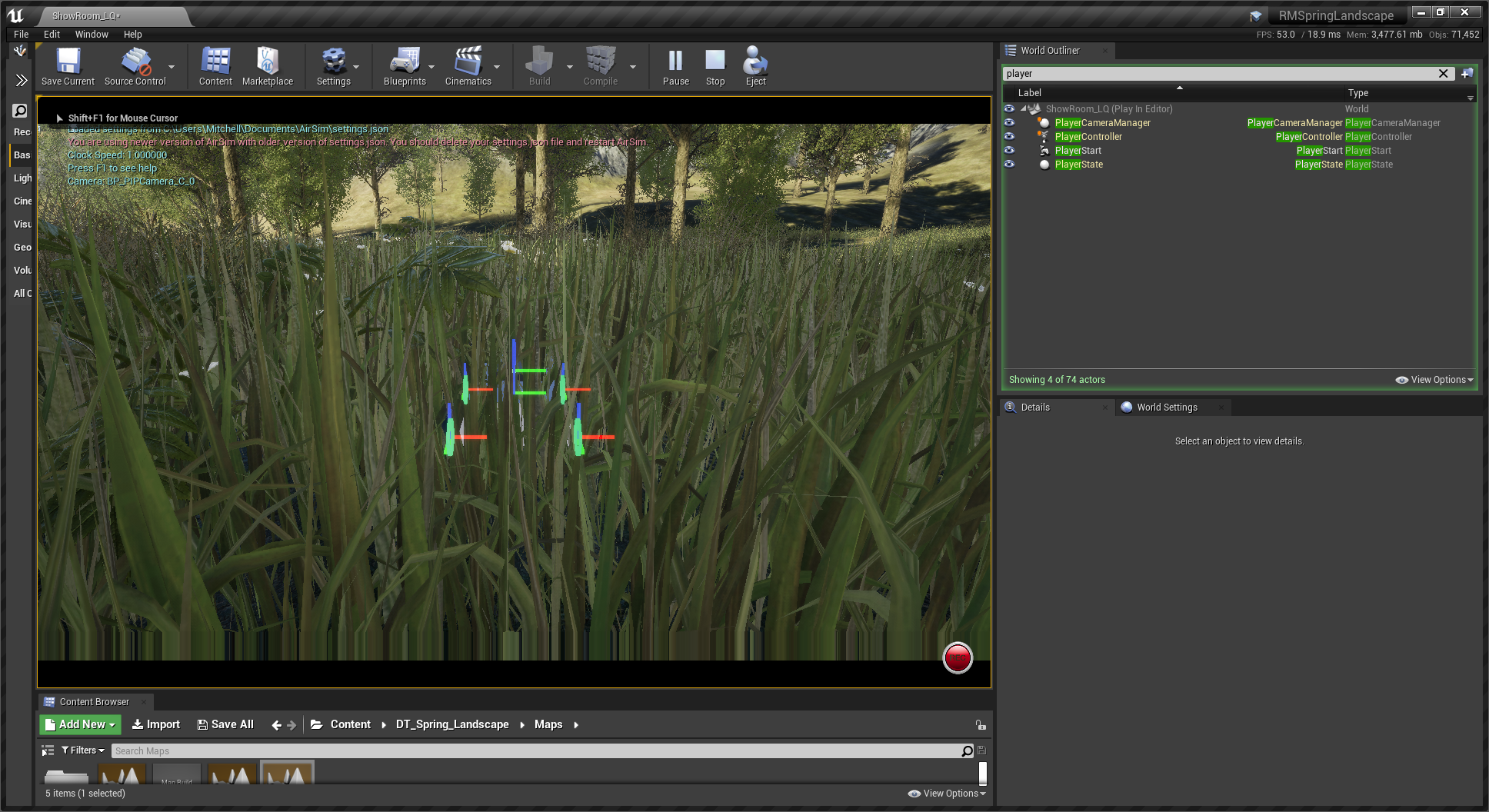

Not designed for robots. Most video games have a humanoid-sized character interacting in these worlds. This would lead to the inclusion of effects like grass or short shrubbery that would get in the way of the robotic systems. For example, the robot that is trying to be simulated in this application is a foot tall, which is often times shorter than the grass that is being used in these scenes. This means that all images or sensor readings taken from the bot will be of the grass, as in the image below.

- Expensive without preview. When looking at the asset packs, it is impossible to know the quality of the map beforehand. There is no mechanism for giving a “preview” - the pack needs to be purchased and downloaded before any of it is available for use. There is also no possibility of a refund. While each individual pack is not expensive (most are less than $100), multiple packs oftentimes need to be previewed in order to figure out which ones are useful.

- Lack of annotations. Training and validating algorithms generally requires information about the map. For example, validating the path-following capabilities of the robot requires knowing the location of the path. However, this information is not provided in any of the maps previewed. Although these features are designed into the levels, there is no way for the programmer to query where they are, making the map very difficult to use.

After reviewing the available map packs, few seemed usable as-is. Although there were some good candidates like the DevTon Spring Landscape or Modular neighborhood pack, none of them really fit the needs of this project. After much debating, the decision was made to create a custom map that could be used for simulation. The criteria for the map were as follows:

- The map should represent a large, outdoor scene, but not be so large as to be resource-intensive.

- The map should have multiple regions with varied textures. It should have a mountainous area, a woods area, water, meadows, lakes, beaches, and a river.

- The map should include natural foliage, but it should not unnecessarily interfere with the sensors of a shorter robot. E.g. most of the map should not be heavily covered in dense, tall grass.

- The map should allow for the simulation of multiple scenarios, as well as contain areas with varying densities of obstacles.

- The map should be heavily annotated such that the coordinates and boundaries of all major obstacles and features are known and available via API.

Designing the custom map

Knowing very little about designing worlds for Unreal, the first step was to find a good tutorial. There aren’t many good, comprehensive text-based tutorials for Unreal, but there is a great free video series on YouTube by Shane Whittington (“GameDev Academy”) that covers the basics. In addition to the freely available Unreal starter content, I used a few additional content packages in the project. First, I used the DevTon Spring Landscape asset pack for its foliage assets, which include a lot of trees, bushes, and flowers. In addition, I used the Flowers and Nature pack for a few additional flower types.

The design of the map was centered around a river splitting the map in half, with three bridges of different styles crossing at different points. This would allow for a variety of interesting path planning exercises, as it would be possible to create a start and end point that are near each other in a straight line, but separated by a long travel distance. In addition, some of the bridges were connected by paths, which could be used to support a path following scenario. Finally, the map was divided into three distinct biomes: forest, meadow, and beach. This would force any robot operating across these regions to handle a wide variety of textures, enabling the development of more robust algorithms. In addition to the traditional outdoor scenes, a hedge maze was added to allow the map to be used for advanced path planning scenarios.
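To illustrate why a river with a few bridges makes path planning interesting, here is a minimal sketch on a toy occupancy grid. It is not derived from the actual map: the grid layout, the cell symbols, and the `shortest_path_length` helper are illustrative assumptions. A breadth-first search compares the straight-line distance between two points on opposite banks with the length of the shortest walkable route over a single bridge.

```python
import math
from collections import deque

# Toy map (hypothetical): '.' is walkable ground, '~' is river, '=' is a bridge.
GRID = [
    "...~...",
    "...~...",
    "...~...",
    "...~...",
    "...=...",
]


def shortest_path_length(grid, start, goal):
    """Return the number of 4-connected steps from start to goal, or None."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    visited = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < rows and 0 <= nc < cols
            # River cells are impassable; bridge cells count as walkable.
            if inside and grid[nr][nc] != "~" and (nr, nc) not in visited:
                visited.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None  # goal is unreachable


# Two points directly across the river from each other.
start, goal = (0, 2), (0, 4)
straight_line = math.dist(start, goal)
route = shortest_path_length(GRID, start, goal)
print(f"straight line: {straight_line:.1f} cells, shortest route: {route} cells")
```

In this toy layout the two points are 2 cells apart in a straight line, but the only route runs down to the bridge and back, for 10 steps. A planner that relies purely on straight-line distance would badly underestimate the cost.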

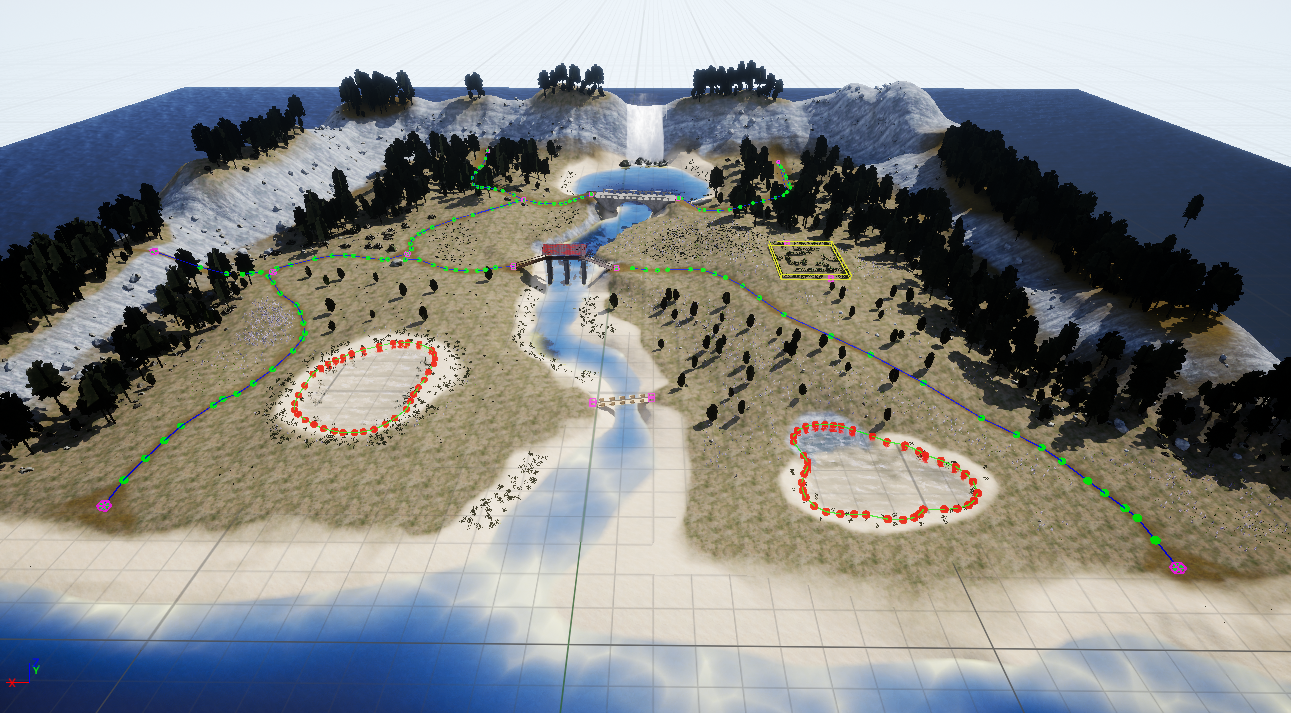

Once the map was designed and laid out, it was necessary to annotate it. One of the problems with annotations is keeping them in sync with map changes. For example, if a path’s location is changed in future iterations of the map, then the annotations should change as well. In addition, it is more ergonomic to annotate the map using the drag-and-drop interface of the Unreal editor than to use some separate interface. The solution that is used contains two parts. First, a few actors are placed in the levels at key points. The actors have no collision and an invisible texture, so they do not impact gameplay. In addition, the actors follow a specific naming convention that allows for easy identification later. Second, the Unreal Engine Python scripting plugin is used to gather the locations of these actors and create the annotations. This pipeline is easy to use and provides immediate visual feedback on the quality of the annotations. The photo accompanying this section shows the annotated map.
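The second half of that pipeline can be sketched roughly as follows. This is a minimal sketch and not the code shipped with the map: the `ANNOT_<kind>_<name>_<index>` naming convention, the `build_annotations` helper, and the output layout are all hypothetical. Inside the Unreal editor, the (label, location) pairs would be read from the level's actors through the Python scripting plugin; here they are passed in as plain tuples so the grouping logic can run anywhere.

```python
import json
from collections import defaultdict

# Hypothetical naming convention: ANNOT_<kind>_<name>_<index>,
# e.g. "ANNOT_path_riverside_002" is the third point of the "riverside" path.
PREFIX = "ANNOT_"


def build_annotations(actors):
    """Group marker actors into ordered point lists keyed by kind and name.

    `actors` is an iterable of (label, (x, y, z)) pairs. Actors whose label
    does not follow the naming convention are ignored.
    """
    grouped = defaultdict(list)
    for label, location in actors:
        if not label.startswith(PREFIX):
            continue
        parts = label[len(PREFIX):].split("_")
        if len(parts) != 3 or not parts[2].isdigit():
            continue  # malformed label: skip it rather than guess
        kind, name, index = parts[0], parts[1], int(parts[2])
        grouped[(kind, name)].append((index, location))

    annotations = defaultdict(dict)
    for (kind, name), points in grouped.items():
        # Sort by the numeric suffix so points follow the feature in order.
        ordered = sorted(points, key=lambda point: point[0])
        annotations[kind][name] = [list(loc) for _, loc in ordered]
    return {kind: dict(features) for kind, features in annotations.items()}


example_actors = [
    ("ANNOT_path_riverside_001", (150.0, 0.0, 10.0)),
    ("ANNOT_path_riverside_000", (0.0, 0.0, 10.0)),
    ("ANNOT_bridge_stone_000", (900.0, 400.0, 35.0)),
    ("StaticMeshActor_12", (5.0, 5.0, 0.0)),  # ordinary level geometry
]
annotations = build_annotations(example_actors)
print(json.dumps(annotations, indent=2, sort_keys=True))
```

Because the annotations are regenerated from the actors themselves, moving a marker in the editor and re-running the script is enough to keep the exported data in sync with the map.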

Once the map was developed, building the remaining simulation components was straightforward. First, a Python package was created to read in the simulation annotations. Then, a separate Python package was created to handle the mechanics of running the RoboMagellan competition runs (e.g. spawning cones, measuring the elapsed time, etc.). In order to develop this functionality, a few capabilities needed to be added to the engine, such as an API to spawn objects and an API to convert XYZ points to GPS waypoints. Finally, the entire project was packaged and uploaded to GitHub to be released. Here is a gif of a short competition run in the new simulator (note: the video appears to be a bit grainy when replayed on YouTube)!
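As an illustration of the XYZ-to-GPS conversion mentioned above, here is a minimal sketch using a flat-earth approximation. It is not UrdfSim's actual API: the `xyz_to_gps` function, the axis mapping (+X to north, +Y to east, +Z up), and the origin coordinates are assumptions made for the example. The only engine detail relied on is that Unreal world units default to centimeters.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 equatorial radius, in meters


def xyz_to_gps(x_cm, y_cm, z_cm, origin_lat_deg, origin_lon_deg, origin_alt_m=0.0):
    """Convert a local point to (latitude, longitude, altitude).

    Assumptions (hypothetical, for illustration only):
      * inputs are in centimeters,
      * +X points north, +Y points east, +Z points up,
      * the map is small enough for a flat-earth approximation.
    """
    north_m = x_cm / 100.0
    east_m = y_cm / 100.0
    up_m = z_cm / 100.0

    # Small-angle approximation: arc length divided by radius gives radians.
    dlat_deg = math.degrees(north_m / EARTH_RADIUS_M)
    # Longitude lines converge toward the poles, so scale by cos(latitude).
    lon_radius_m = EARTH_RADIUS_M * math.cos(math.radians(origin_lat_deg))
    dlon_deg = math.degrees(east_m / lon_radius_m)

    return origin_lat_deg + dlat_deg, origin_lon_deg + dlon_deg, origin_alt_m + up_m


# Hypothetical origin near Seattle; the point is 100 m north and 2.5 m up.
lat, lon, alt = xyz_to_gps(10000.0, 0.0, 250.0, 47.6, -122.3)
print(f"lat={lat:.6f}, lon={lon:.6f}, alt={alt:.2f} m")
```

The flat-earth approximation is reasonable for a map a few kilometers across; a larger map would call for a proper geodetic library instead.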

Additional thoughts on Game Engines for Robotic Simulation

Using a game engine to simulate robots certainly comes with the benefits described in the introduction. However, there are a few caveats which should be taken into account when choosing this option:

- The components of a game engine are designed for video games. This seems obvious, but becomes a problem when the goals of robotic simulation diverge from the goals of video games. A good example of this is the physics engine design. Generally, video game physics engines are designed to yield passable physics with excellent performance, which allows games to run on lower-end devices. Robotic simulations generally have complex physical constraints that need to be simulated extremely accurately, which the stock physics engines can’t handle well, and there is no easy solution to this problem. Another example is the rendering engine: it is designed to produce beautiful graphics at reasonable framerates (e.g. 60 FPS). For many machine learning applications, however, you would prefer passable graphics at a high framerate, decreasing your training time. An example of a simulator that takes the latter approach is Habitat-Sim from Facebook AI Research (FAIR).

- While assets are available, manual design work will most likely still be needed. At the very least, an annotation strategy will need to be developed. More generally, a custom map will likely need to be developed in order to simulate the right scenarios. The promise of “download and drop-in” does not seem to hold up very well in practice.

- The hardest and most expensive part of developing simulations is not the code, but the technical assets. Good technical art is hard to come by, and designing it is not easy. There are a variety of tools like Blender that need to be mastered in order to develop these assets. The freely available assets either in the Unreal Store or on sites like TurboSquid are generally not usable in high-quality simulations. This is different from most other software projects, in which most of the development time is spent writing code.

- Invest in a good computer. The Unreal Engine is very resource intensive. Much of the development time was spent compiling shaders and compiling code, even on a high-end desktop machine.

Summary

In this post, we created a simulation for the SRS RoboMagellan competition leveraging the Unreal Engine and UrdfSim. Although the initial intention was to repackage an existing asset, eventually a custom map was developed that will be used to explore future robotics concepts on this blog. During the development process, some caveats were discovered that show why using game engines to develop robotic simulations is not a shortcut without drawbacks.

Download links

- UrdfSim: The code for the UE4-based robotic simulation plugin. This can be compiled into your own custom maps to create your own scenarios.

- Three Bridges Annotated Unreal Map: A custom map built explicitly for robotic simulation. It comes pre-packaged with UrdfSim. For information on how to download the map, run the simulation, and use the annotations, view the README.

- RoboMagellan Orchestrator: This Python script handles the mechanics of running a RoboMagellan competition run. It allows you to set spawn points for each of the waypoints either deterministically or randomly, and will monitor and run the simulation according to the rules of the competition (e.g. spawn the robot, allow it to run for a period of time, record when waypoints are reached, and compute a final score). Given interest, this could even be used to create a “virtual” version of the competition, giving those without the means to build a robot a way to compete.